Edge AI is expanding rapidly in consumer electronics, and specialized integrated circuits (ICs) for Edge AI are the engine driving these next-generation devices. Delivering powerful AI capabilities directly on a device demands extreme power efficiency. Traditional processors often fall short here, which creates a significant power challenge for product developers. Specialized AI chips address this by optimizing their design for on-device AI performance at low power. This blog explores how these IC components achieve their energy efficiency and what this means for future consumer tech devices.

Key Takeaways

- Edge AI devices need special chips. These chips help devices do smart tasks without using too much power.
- Traditional computer chips are not a good fit for Edge AI. They use too much energy for tasks like facial recognition.
- New chips called NPUs (Neural Processing Units) are made for AI. They help devices work faster and save battery life.
- Software also helps save power. It makes AI models smaller and more efficient, so they use less energy.
- Advanced chip design and packaging make these chips even better. They help devices run AI tasks quickly and efficiently.

Edge AI’s Power Challenge: Demanding Efficiency

The Energy Cost of AI Workloads

AI workloads require significant computational resources. A single inference pass of a deep learning model can involve millions or even billions of multiply-accumulate operations, and this translates directly into high energy consumption. For battery-operated consumer electronics, this presents a major challenge. Devices like smartphones, smartwatches, and AR/VR headsets rely on long battery life, and heavy AI workloads drain the battery quickly. Power semiconductors, such as power-management ICs, therefore play an essential role: they manage and optimize the flow of electrical power within these devices so that AI processing does not compromise battery longevity.

Limitations of Traditional Processors

Traditional processors, such as general-purpose CPUs and GPUs, are not optimized for AI tasks. CPUs excel at sequential processing. GPUs handle parallel tasks well, but their architecture is broad, designed for graphics rendering and general-purpose computing. When these processors run AI models, they often consume excessive power because they lack hardware specialized for AI computations such as dense matrix multiplication. The result is poor performance per watt, which makes them unsuitable for many battery-bound edge devices.

The Imperative for On-Device Processing

On-device AI processing is crucial for several reasons. First, it enhances privacy: processing data locally reduces the need to send sensitive information to remote servers. Second, it reduces latency: running AI models directly on the device gives quicker response times because data does not need to travel to and from a server, which is particularly advantageous for real-time applications. Third, it provides offline functionality: on-device AI lets applications operate without an internet connection, boosting reliability and accessibility in places with limited or no connectivity. These benefits make on-device AI a necessity for modern consumer devices and for many IoT edge devices. Delivering it requires specialized AI chips with excellent power efficiency, careful power management, and a sound balance of power-performance tradeoffs. This is why specialized IC components for Edge AI in consumer electronics are so vital.

Hardware-Software Co-Design for Power Efficiency

Achieving optimal power efficiency in Edge AI devices requires a close partnership between hardware and software design. This approach, known as hardware-software co-design, ensures that every component works together to minimize energy consumption while maximizing performance. It is a critical strategy for developing power-efficient architecture in modern consumer electronics.

Specialized AI Accelerators: NPUs and SoCs

Specialized AI accelerators are at the heart of energy-efficient computing for Edge AI. These dedicated processors handle AI workloads much more efficiently than general-purpose CPUs or GPUs. Neural Processing Units (NPUs) are a prime example. They are specifically designed for the parallel computations common in neural networks. Many modern devices integrate these specialized AI chips.

For instance, Apple iPhones use the Apple Neural Engine built into Apple silicon, and Apple's M-series chips for Macs integrate the same kind of AI acceleration. Google Pixel smartphones feature the Google Tensor chip, Huawei smartphones incorporate dedicated AI hardware, and Qualcomm and Samsung processors also include specialized AI capabilities. In the PC world, Intel's Meteor Lake processors have a built-in neural processing unit (NPU), which Intel originally called a VPU, and AMD includes AI Engines in its Versal series.

Most consumer applications, such as smartphones, mobile devices, and laptops, combine the NPU with other coprocessors on a single semiconductor microchip. This integrated circuit is known as a System-on-Chip (SoC). This SoC design allows for tight integration and optimized data flow, which significantly boosts performance per watt.

Beyond digital accelerators, chip designers are exploring alternatives such as power-efficient analog compute. Mythic's analog computing technology improves power efficiency by storing AI parameters directly within the processor. This design avoids the memory bottleneck, a common issue in digital computing architectures where weights must be repeatedly fetched from separate memory. The Mythic MM1076 chip, for example, consumes 3.8 times less power than an industry-standard digital AI inference chip in an independent test running YOLOv8s at batch size 1 and 1408×1408 resolution. The M1076 AMP uses the Mythic Analog Compute Engine (Mythic ACE™), which integrates a flash memory array and analog-to-digital converters (ADCs). This integration lets the chip store model parameters and perform low-power, high-performance matrix multiplication in the same place, so powerful AI models can be deployed without the usual high power consumption and thermal management challenges. According to Mythic, the M1076 AMP delivers GPU-level compute at up to 1/10th the power of other embedded AI processors and 1/100th the power of a GPU. This approach shows how AI chip design continues to evolve toward better energy use.

Dynamic Voltage and Frequency Scaling (DVFS)

Dynamic Voltage and Frequency Scaling (DVFS) is a crucial power management technique. It intelligently adjusts the voltage and frequency of processors based on current workload requirements. This mechanism ensures the system consumes only the necessary amount of power. It conserves energy when full computational power is not required.

DVFS achieves significant power savings because dynamic power in CMOS logic scales roughly with the square of the supply voltage multiplied by the clock frequency, so lowering both together yields more-than-proportional savings. In low-utilization scenarios, DVFS can reduce dynamic power by 40-70%, and voltage scaling can reduce leakage power by 2-3 times. These savings can extend battery life in embedded systems and laptops by up to 3 times during periods of low activity. This dynamic adjustment helps manage power-performance tradeoffs, keeping devices responsive while conserving battery.
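To make the voltage-frequency relationship concrete, here is a minimal Python sketch of the standard first-order CMOS dynamic power model, P = α·C·V²·f, plus a simple governor that picks the lowest-power operating point able to meet a required clock rate. The operating points, activity factor, and capacitance are hypothetical illustration values, not figures for any real chip, and real DVFS governors use far richer policies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperatingPoint:
    """A hypothetical DVFS operating point (voltage in volts, frequency in Hz)."""
    name: str
    voltage_v: float
    freq_hz: float


def dynamic_power_w(op: OperatingPoint, activity: float, capacitance_f: float) -> float:
    """First-order CMOS dynamic power: P = alpha * C * V^2 * f."""
    return activity * capacitance_f * op.voltage_v ** 2 * op.freq_hz


def pick_operating_point(points: list[OperatingPoint], required_hz: float) -> OperatingPoint:
    """Return the lowest-power point that still meets the required frequency.

    alpha and C are shared by all points, so comparing V^2 * f is enough.
    If no point is fast enough, saturate at the fastest one.
    """
    feasible = [p for p in points if p.freq_hz >= required_hz]
    if not feasible:
        return max(points, key=lambda p: p.freq_hz)
    return min(feasible, key=lambda p: p.voltage_v ** 2 * p.freq_hz)


# Hypothetical operating points for an edge SoC core.
POINTS = [
    OperatingPoint("HIGH", 1.0, 2.0e9),
    OperatingPoint("MID", 0.8, 1.2e9),
    OperatingPoint("LOW", 0.6, 0.6e9),
]

if __name__ == "__main__":
    for op in POINTS:
        print(op.name, round(dynamic_power_w(op, activity=0.2, capacitance_f=1e-9), 4), "W")
    print("Light load ->", pick_operating_point(POINTS, 0.5e9).name)
```

With these made-up numbers, the LOW point draws roughly a tenth of the HIGH point's dynamic power. Note that energy per operation scales with V² alone: running slower without also lowering the voltage cuts power, but not the energy needed to finish a task.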

Clock and Power Gating Techniques

Clock gating and power gating are two fundamental power-saving features in IC design. They reduce unnecessary power consumption in various devices.

Clock gating turns off the clock signal to specific parts of a digital design when they are not needed. It is a simple and widely used way to reduce dynamic power in SoCs with tight power budgets: with the clock stopped, switching activity drops, and so does dynamic power. Clock gating can apply to anything from a single flip-flop to entire subsystems. It is especially suitable for registers that hold the same value over multiple clock cycles, because it avoids the power wasted re-triggering those registers every cycle.

Power gating disconnects power from circuit blocks that are not in use. It can be implemented inside the SoC or with discrete components such as load switches or MOSFETs. By switching off entire circuits when they are idle, power gating eliminates their dynamic power and, more importantly, most of their leakage (static) power. It may require extra circuitry, such as retention flops to preserve state during power-down and isolation cells to separate powered-down blocks from active ones. Power gating is increasingly common at smaller technology nodes, where static power is a significant concern. In short, clock gating primarily targets dynamic power by reducing switching activity, while power gating targets static (leakage) power by cutting current flow. Both techniques are vital for extending battery life in IoT edge devices and other consumer electronics.
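The tradeoff between the two techniques can be sketched with a first-order energy model. The Python below is a minimal illustration under stated assumptions: an idle block burns hypothetical clock-tree power (`idle_clock_w`) unless it is clock-gated, burns leakage (`leakage_w`) unless it is power-gated, and power gating pays a one-time wake-up cost (`wake_energy_j`) for restoring state and recharging the supply rail. All names and numbers are illustrative, and the model ignores residual leakage in retention flops and isolation cells.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BlockPower:
    """Hypothetical idle-power figures for one logic block, in watts."""
    idle_clock_w: float  # clock-tree/register power if the clock keeps running while idle
    leakage_w: float     # static leakage whenever the block is powered


def idle_energy_j(block: BlockPower, idle_s: float, clock_gated: bool,
                  power_gated: bool, wake_energy_j: float = 0.0) -> float:
    """Energy spent while the block sits idle for idle_s seconds."""
    if power_gated:
        # Supply cut: no clock or leakage power (ideally), only the wake-up cost.
        return wake_energy_j
    clock_w = 0.0 if clock_gated else block.idle_clock_w
    return (clock_w + block.leakage_w) * idle_s


def power_gating_break_even_s(block: BlockPower, clock_gated: bool,
                              wake_energy_j: float) -> float:
    """Shortest idle period for which power gating saves energy."""
    residual_w = (0.0 if clock_gated else block.idle_clock_w) + block.leakage_w
    return wake_energy_j / residual_w


if __name__ == "__main__":
    npu = BlockPower(idle_clock_w=0.05, leakage_w=0.01)
    for label, cg, pg in [("no gating", False, False),
                          ("clock gating", True, False),
                          ("power gating", True, True)]:
        print(label, idle_energy_j(npu, 10.0, cg, pg, wake_energy_j=0.002), "J")
    print("break-even idle time:",
          power_gating_break_even_s(npu, clock_gated=True, wake_energy_j=0.002), "s")
```

The break-even function captures why power gating suits long idle periods: if the block will wake again sooner than the break-even time, clock gating alone wastes less energy.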

Software Optimizations for Edge AI Power Management

Software plays a crucial role in managing power for Edge AI. It works with hardware to reduce energy use. These software strategies ensure AI features run efficiently on devices. This helps extend battery life and improves overall performance.

Model Compression: Pruning and Quantization

Model compression techniques reduce the size and complexity of AI models. This lowers the computational power needed for inference. Pruning removes less important connections or neurons from a neural network. Quantization reduces the precision of the numbers used in the model. For example, it changes 32-bit floating-point numbers to 8-bit integers. This significantly reduces memory and computation requirements.
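As an illustration of the 32-bit-to-8-bit conversion, here is a minimal sketch of symmetric, per-tensor int8 quantization in plain Python: each float weight w is stored as an integer q in [-127, 127] with one shared scale, so w ≈ scale × q. Production toolchains such as TensorFlow Lite use more elaborate schemes (per-channel scales, zero points, calibrated activation ranges); this sketch only shows the core idea, and the function names are our own.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: w ~= scale * q, with q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # One shared scale maps the largest-magnitude weight onto +/-127.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Map int8 codes back to approximate float values."""
    return [scale * v for v in q]


if __name__ == "__main__":
    w = [0.5, -1.27, 0.0, 0.3, 0.0123]
    q, scale = quantize_int8(w)
    w_hat = dequantize_int8(q, scale)
    print("codes:", q, "scale:", scale)
    # Rounding error is at most half a quantization step per weight.
    print("max error:", max(abs(a - b) for a, b in zip(w, w_hat)))
    # Storage drops from 4 bytes (float32) to 1 byte (int8) per weight.
    print("size ratio:", (4 * len(w)) / (1 * len(q)))
```

The 4x size ratio comes purely from storing one byte instead of four per weight; the accuracy cost is the rounding error, which grows when a few large outliers stretch the shared scale.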

Pruning can reduce model size by 30–50%, and in some cases by up to 90% without a notable loss in accuracy, depending on the model and task. Pushing sparsity too far, however, can cause a significant drop in model performance, so the safe pruning ratio must be validated for each model. Quantization can shrink model size by as much as 4x (for example, from 32-bit floats to 8-bit integers) and cut inference latency by up to 69%. Studies suggest that quantization frequently delivers better efficiency without compromising too much on accuracy, although reduced precision can degrade performance, especially on complex tasks. Combining both techniques can produce models up to 10 times smaller with further speed improvements. Over-optimizing, however, can lead to accuracy issues or hardware compatibility problems. Used carefully, these methods are key to model optimization and lower energy consumption.
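Magnitude pruning, one common way to decide which connections are "less important", can be sketched in a few lines: sort weights by absolute value and zero out the smallest fraction. This is a minimal, hypothetical helper, not any framework's API. In practice, pruning is usually followed by fine-tuning to recover accuracy, and zeroed weights only save energy if the hardware or kernels can skip them (for example, through sparse storage formats).

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitudes."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


if __name__ == "__main__":
    w = [0.9, -0.05, 0.4, 0.01, -0.7, 0.2]
    pruned = magnitude_prune(w, sparsity=0.5)
    print(pruned)
    print("nonzero weights kept:", sum(1 for v in pruned if v != 0.0), "of", len(w))
```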

Power-Aware Task Scheduling

Power-aware task scheduling intelligently manages when and how AI tasks run. It considers the device’s current power state and available battery. The scheduler prioritizes critical tasks. It delays less urgent ones. It also adjusts the processing speed based on the workload. For instance, a smartphone might run an AI task at a lower frequency when the battery is low. This reduces power consumption. This dynamic approach ensures optimal use of available power. It balances performance with energy savings.
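A power-aware scheduler can be sketched as a policy that looks at battery state and decides which tasks run now and at what performance level. The Python below is a hypothetical, deliberately simple policy; the thresholds (50% and 20%), the `critical` flag, and the level names are illustrative assumptions rather than values from any real operating system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    name: str
    critical: bool  # must run now (e.g., wake-word detection) vs. deferrable


def schedule(tasks: list[Task], battery_pct: float, charging: bool = False,
             low_pct: float = 20.0, mid_pct: float = 50.0):
    """Return (tasks to run now, deferred tasks, performance level)."""
    if charging or battery_pct > mid_pct:
        return list(tasks), [], "high"
    if battery_pct > low_pct:
        # Run everything, but at a reduced frequency to stretch the battery.
        return list(tasks), [], "medium"
    # Low battery: run only critical work at the lowest level; defer the rest.
    run = [t for t in tasks if t.critical]
    deferred = [t for t in tasks if not t.critical]
    return run, deferred, "low"


if __name__ == "__main__":
    jobs = [Task("wake_word", critical=True), Task("photo_tagging", critical=False)]
    for pct in (80, 35, 15):
        run, deferred, level = schedule(jobs, pct)
        print(f"{pct}%: run={[t.name for t in run]} "
              f"deferred={[t.name for t in deferred]} level={level}")
```

In a real system the "level" would map onto DVFS operating points, and deferred tasks would be queued until the device is charging or the battery recovers.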

Efficient Data Movement and Memory Access

Moving data between memory and the processor consumes significant energy. Efficient data movement minimizes this energy cost. It involves optimizing data structures and access patterns. This reduces the number of times data moves. Specialized memory architectures also play a part. They offer high bandwidth and low latency. This is crucial for AI workloads.

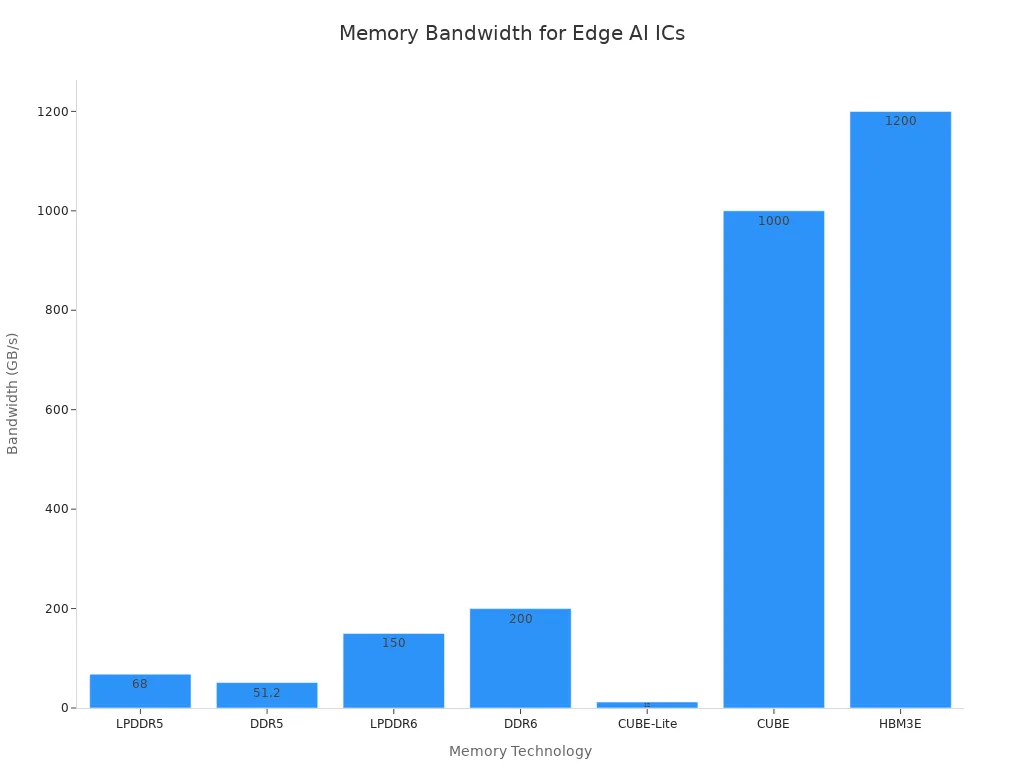

The chart above shows memory bandwidth for various technologies. It highlights the need for fast memory in AI devices.

Current AI smartphones use LPDDR5 memory, but it is becoming a bottleneck. LPDDR6, expected around 2026, aims to address these challenges. Technologies like CUBE-Lite offer low power and high bandwidth for TinyML devices. Because efficient memory design directly affects the overall power budget of AI devices, this optimization is vital for sustained performance.

Dynamic Power Management for Adaptive AI

Dynamic power management is crucial for Edge AI. It allows devices to adapt their power usage in real time, which ensures efficient operation and extends battery life. These strategies help maintain high performance while minimizing power consumption.

Event-Driven Architectures

Event-driven architectures are key to saving power in Edge AI. They process data only when specific events occur, avoiding the energy wasted by continuous processing. For example, a smart camera can run its AI model only when it detects motion. This event-triggered inference drastically lowers the duty cycle of the expensive processing stage, which significantly boosts efficiency. Filtering out uninteresting data at the source also reduces bandwidth usage and costs, and frees compute resources for the work that matters. Together, these effects help devices conserve battery power.
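The effect of event-triggered inference on battery life comes down to duty-cycle arithmetic: average power is the time-weighted mix of active and low-power states. The sketch below assumes that a cheap, always-on motion score per frame gates an expensive inference stage, and that each triggered frame keeps the accelerator active for one frame period. The threshold, the 1.5 W active power, and the 0.01 W standby power (which we assume already includes the motion detector) are hypothetical numbers.

```python
def duty_cycle(motion_scores: list[float], threshold: float) -> float:
    """Fraction of frames whose motion score triggers the expensive inference stage."""
    if not motion_scores:
        return 0.0
    triggered = sum(1 for s in motion_scores if s >= threshold)
    return triggered / len(motion_scores)


def average_power_w(duty: float, active_w: float, standby_w: float) -> float:
    """Time-weighted average power: duty * P_active + (1 - duty) * P_standby.

    Assumes each triggered frame keeps the accelerator active for one frame period.
    """
    return duty * active_w + (1.0 - duty) * standby_w


if __name__ == "__main__":
    # Hypothetical per-frame motion scores from a low-power detector.
    scores = [0.0, 0.1, 0.9, 0.05, 0.8, 0.0, 0.0, 0.0, 0.0, 0.0]
    d = duty_cycle(scores, threshold=0.5)
    always_on = average_power_w(1.0, active_w=1.5, standby_w=0.01)
    event_driven = average_power_w(d, active_w=1.5, standby_w=0.01)
    print(f"duty cycle: {d:.0%}, always-on: {always_on:.3f} W, "
          f"event-driven: {event_driven:.3f} W")
```

Because the standby term is small, average power falls almost in proportion to the duty cycle, which is why cheap event filtering in front of an expensive model pays off.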

State Machines for Granular Control

State machines offer granular control over power management. They define different operational states for a device. Each state has specific power settings. For instance, a device might have “sleep,” “idle,” and “active” states. The system transitions between these states based on tasks and user activity. This allows for precise power management strategies. Engineers design these state machines to optimize power for various scenarios. This careful design helps extend the battery life of consumer devices.
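A state machine like this is straightforward to express in code. The sketch below uses the sleep, idle, and active states mentioned above with a transition table keyed by (state, event); the event names, clock frequencies, and the choice of which states power the NPU are hypothetical. Unlisted events leave the state unchanged, which keeps spurious events from waking hardware.

```python
from enum import Enum


class PowerState(Enum):
    SLEEP = "sleep"
    IDLE = "idle"
    ACTIVE = "active"


# Hypothetical per-state settings: (CPU clock in MHz, NPU powered on?)
STATE_SETTINGS = {
    PowerState.SLEEP: (0, False),
    PowerState.IDLE: (200, False),
    PowerState.ACTIVE: (1800, True),
}

# Allowed transitions, keyed by (current state, event).
TRANSITIONS = {
    (PowerState.SLEEP, "wake"): PowerState.IDLE,
    (PowerState.IDLE, "task_start"): PowerState.ACTIVE,
    (PowerState.IDLE, "timeout"): PowerState.SLEEP,
    (PowerState.ACTIVE, "task_done"): PowerState.IDLE,
}


class PowerStateMachine:
    def __init__(self) -> None:
        self.state = PowerState.SLEEP

    def handle(self, event: str) -> PowerState:
        """Apply an event; events with no entry in TRANSITIONS are ignored."""
        self.state = TRANSITIONS.get((self.state, event), self.state)
        return self.state

    @property
    def settings(self) -> tuple[int, bool]:
        return STATE_SETTINGS[self.state]


if __name__ == "__main__":
    fsm = PowerStateMachine()
    for event in ["task_start", "wake", "task_start", "task_done", "timeout"]:
        state = fsm.handle(event)
        print(f"{event:>10} -> {state.value:<6} settings={fsm.settings}")
```

Keeping transitions in a table rather than in nested conditionals makes it easy to audit which events can power up the NPU, and to add states such as "charging" or "low battery" later.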

Adaptive Workload Distribution

Adaptive workload distribution optimizes power across different processing units. Edge AI devices often have various processors, like CPUs, NPUs, and DSPs. The system dynamically assigns tasks to the most power-efficient processor for that specific job. EdgeCortix’s MERA software, for example, uses a smart compiler and runtime. It deploys and executes across a mix of processors. It selects the best combination for each AI application. MERA monitors workload and environmental conditions. It dynamically adjusts configurations. This includes partitioning deep neural networks for optimal distribution.
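The core decision in adaptive workload distribution can be sketched as: among the processors that meet a task's latency deadline, pick the one with the lowest energy per run (power × latency). This is a hypothetical illustration, not the MERA or Synaptics API; the per-processor latency and power profiles are made-up numbers that a real runtime would measure or obtain from a compiler's cost model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    """Hypothetical measured cost of one task on one processor."""
    latency_ms: float
    power_w: float

    @property
    def energy_mj(self) -> float:
        # watts * milliseconds = millijoules
        return self.power_w * self.latency_ms


def assign(profiles: dict[str, Profile], deadline_ms: float) -> str:
    """Pick the lowest-energy processor that meets the deadline.

    If no processor meets it, fall back to the fastest one.
    """
    feasible = {name: p for name, p in profiles.items() if p.latency_ms <= deadline_ms}
    if not feasible:
        return min(profiles, key=lambda name: profiles[name].latency_ms)
    return min(feasible, key=lambda name: feasible[name].energy_mj)


if __name__ == "__main__":
    # Made-up profiles for a keyword-spotting model on three processors.
    kws = {
        "cpu": Profile(latency_ms=12.0, power_w=1.2),
        "npu": Profile(latency_ms=2.0, power_w=0.8),
        "dsp": Profile(latency_ms=6.0, power_w=0.15),
    }
    for deadline in (10.0, 3.0, 1.0):
        print(f"deadline {deadline} ms -> {assign(kws, deadline)}")
```

With a relaxed deadline, the slower but frugal DSP wins on energy; a tight deadline pushes the same task onto the NPU. Ranking by energy per run rather than by power alone matters because a fast, power-hungry unit can still finish a task with less total energy.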

The Synaptics Astra SL series also uses heterogeneous computing. It dynamically allocates tasks to NPUs, DSPs, or MCUs so that AI workloads are processed with minimal power. This approach balances energy efficiency with high performance, which is crucial for battery-powered devices. Similarly, the Renesas RZ/V2H and Arm's Neoverse and Cortex-X CPU designs prioritize adaptive power scaling, adjusting power consumption to match workload demands. This kind of power-optimized workload management is vital for modern IoT edge devices.

IC Components for Edge AI: Power-Efficient Architecture

Specialized IC components for Edge AI in consumer electronics drive the next wave of intelligent devices. These components require careful architectural choices to deliver high performance with minimal power. This section explores the fundamentals of power-efficient platform architecture for Edge AI.

Designing Power-Efficient SoCs

Designing power-efficient Systems-on-Chip (SoCs) for Edge AI involves a holistic approach. This approach starts with early power optimization. It integrates hardware and software from the beginning. This foundational method, called hardware-software co-design, ensures software uses the hardware’s power-saving features. Key hardware elements include Dynamic Voltage and Frequency Scaling (DVFS), clock and power gating, and specialized accelerators like GPUs, TPUs, and NPUs. Software manages these features through power-aware scheduling and real-time monitoring. It also uses well-crafted firmware and drivers.

The machine learning model itself significantly affects power use, so model optimization is crucial. This involves efficient architectures such as MobileNets and EfficientNets, as well as TinyML techniques. Edge-optimized frameworks like TensorFlow Lite and PyTorch Mobile also help. Together, these let complex inference run on phones and other edge hardware and, with TinyML frameworks such as TensorFlow Lite for Microcontrollers, even on microcontrollers with very little power.

Dynamic system power management maximizes time spent in low-power sleep states. This involves designing event-driven, sleep-centric architectures. These architectures use duty cycling and event-driven processing. Well-defined state machines (Active/Run, Idle, Standby/Sleep, Charging, Low Battery) control transitions between power states. This ensures energy is used only when necessary. It also optimizes for the energy cost of transitions.

Choosing power-efficient hardware and software platforms is also vital. ARM-based processors, especially those with ARM big.LITTLE technology, are widely adopted. They combine high-performance ‘big’ cores with high-efficiency ‘LITTLE’ cores. This offers excellent performance per watt. True optimization involves porting software to these architectures. It addresses compatibility, restructures build pipelines, and fine-tunes every layer for efficiency. This comprehensive silicon-to-systems design ensures optimal power efficiency.

Optimized Chip Designs for Power Efficiency

Optimized chip design plays a critical role in achieving high power efficiency for Edge AI. Application-Specific Integrated Circuits (ASICs) are custom-designed AI chips that accelerate neural network models. Google's Edge TPU is a prime example, providing fast and efficient AI inference on edge devices. Because an ASIC's fixed architecture is tailored to specific AI inference tasks, it can deliver very low power consumption and high speed for those tasks, at the cost of flexibility.

Memory optimization is crucial for maximizing effective bandwidth on hardware accelerators. FPGAs and ASICs use specialized memory hierarchies that require careful memory planning to ensure efficient data access and processing, which improves overall inference performance. Model techniques help here too: quantization reduces the precision of model weights and activations, and pruning removes unnecessary weights from neural networks. These methods are highly effective on ASICs and FPGAs, where they can drastically improve inference speed and reduce power consumption, because the reduced model complexity fits better within the fixed or reconfigurable resources of these specialized AI chips.

Application-Specific Instruction-set Processors (ASIPs) represent a trend in heterogeneous multicore SoCs. They are specialized processor cores whose architectures and instruction sets are optimized for particular applications. ASIPs combine software programmability with application-specific hardware, offering both flexibility and high throughput at low energy. Their instruction-set architecture (ISA) is tailored to implement selected functions efficiently while leaving enough flexibility for software changes. This architectural specialization, together with instruction-level and data-level parallelism, lets ASIPs approach the performance and energy efficiency of fixed-function custom logic, which makes them well suited to IoT edge devices.

The Role of Advanced Packaging

Advanced packaging technologies significantly improve power efficiency and performance in Edge AI ICs. High Bandwidth Memory (HBM) is a key innovation. It stacks memory vertically and places it close to the GPU. This reduces latency, boosts data transfer speeds, and lowers power consumption. Interposers and substrates facilitate efficient communication between components. They integrate GPUs and HBMs into a single AI chip package.

Innovations like hybrid bonding, embedded bridges, and various interposers increase interconnect density. This shortens signal paths. It boosts bandwidth and reduces power loss. This is critical for AI workloads. These advancements help overcome several challenges. They address the “memory wall” through HBM. They tackle I/O and communication issues by reducing latency using chiplet and interposer technologies. They also mitigate “power” and “thermal walls” through improved power efficiency and thermal power management.

By co-packaging logic and memory with optimized interconnects, advanced packaging enables compact, powerful AI accelerators that support real-time decision-making in edge devices with minimal power draw. It also enables modular, heterogeneous integration that moves beyond traditional single-die systems, using chiplets and stacked dies for optimal performance and power usage. Specifically, 3D integration stacks dies vertically using Through-Silicon Vias (TSVs), providing very fast signal transfer and reduced power consumption. This is crucial for memory-intensive AI applications and helps overcome the memory wall. Chiplets further contribute by allowing different parts of a chip to be built on different process nodes, improving efficiency and design flexibility.

We explored key strategies for power-efficient IC design in Edge AI, all built on a synergy between hardware innovation and software optimization. Specialized AI chips, including NPUs, AI accelerators, and heterogeneous SoCs, serve as the engine of the Edge AI revolution in consumer electronics and make powerful on-device AI possible. Industry projections suggest a 100- to 1,000-fold reduction in power consumption per AI task for on-device AI compared to cloud solutions. This advancement will enable new device form factors and capabilities. For example, Renesas' RA8P1 microcontrollers integrate an NPU for AI acceleration, enabling applications such as advanced robotics and face recognition. Intelligent chip design like this will power the next generation of consumer devices.

FAQ

What is Edge AI?

Edge AI processes data directly on a device. It does not send data to a central cloud server. This approach improves privacy and reduces latency. It also allows devices to work offline.

Why is power efficiency crucial for Edge AI?

Many Edge AI devices run on batteries. High power consumption drains batteries quickly. Power-efficient ICs ensure devices can perform AI tasks for longer. This extends battery life for users.

How do specialized ICs make Edge AI more power-efficient?

Specialized ICs, like NPUs, are designed for AI tasks. They perform AI computations with much less energy than general-purpose processors. This dedicated design boosts efficiency significantly.

What is hardware-software co-design in Edge AI?

Hardware-software co-design means engineers develop hardware and software together so that they work in concert to save power. It optimizes performance while minimizing energy use.

Can you name some consumer devices that use these specialized ICs?

Smartphones, smartwatches, and AR/VR headsets use these ICs. Laptops and smart home devices also benefit. They enable features like voice assistants and facial recognition.
