Combining Unity with the ESP32 microcontroller offers a powerful foundation for building a drone simulator. Unity provides a versatile platform for crafting immersive virtual environments, while the ESP32 microcontroller enables real-time data processing and hardware integration. Peripherals play a critical role in enhancing the simulation experience by adding realism and interactivity.

- The growing popularity of esports and online gaming communities has driven demand for high-quality peripherals.
- Amateur and professional gamers alike are investing in advanced controllers, sensors, and other hardware to improve their performance.
- Technological advancements in peripherals, such as precise sensors and responsive joysticks, are further transforming simulation projects.

This synergy between software, hardware, and peripherals helps drone simulation projects deliver both educational and practical value.

Key Takeaways

- Unity is a strong tool for building drone simulations. It helps developers create realistic flight behavior and weather effects.
- The ESP32 microcontroller strengthens the simulator by processing data quickly and connecting easily to a wide range of peripherals.
- Adding sensors such as gyroscopes and accelerometers is important, because they capture how the drone moves and rotates during flight.
- Wi-Fi and Bluetooth let Unity and the ESP32 exchange data wirelessly, which keeps the simulator responsive.
- ESPHome makes connecting hardware simple. Developers can link sensors and controllers through YAML configuration instead of writing low-level firmware code.

Overview of Unity and the ESP32 Microcontroller

Unity as a platform for drone simulation

Unity serves as a robust platform for developing drone simulators thanks to its features and flexibility. Its built-in rigid-body physics engine (NVIDIA PhysX for 3D) provides the foundation on which developers script aerodynamic forces such as thrust, lift, and drag, enabling realistic simulation of various drone configurations. Developers can leverage Unity’s customizable virtual environments to create diverse scenarios, such as mountainous terrain or stormy weather, for testing drones under different circumstances.

The platform also supports AI integration (for example, through the Unity ML-Agents Toolkit), which lets users test autonomous flight behaviors in a controlled virtual space. Data analysis tools, such as the Profiler and custom logging scripts, make it possible to track and visualize performance metrics like flight dynamics and, given a suitable model, energy consumption. These features make Unity a strong choice for crafting immersive and functional drone simulation experiences.

| Feature | Contribution to Effectiveness |
|---|---|
| AI Integration | Enables testing and fine-tuning of autonomous flight behaviors |
| Data Analysis Tools | Tracks and visualizes performance metrics |
| Rigid-Body Physics Engine | Provides the basis for scripted aerodynamics and diverse drone configurations |
| Customizable Virtual Environments | Facilitates scenario creation for testing |

Features and capabilities of the ESP32 microcontroller

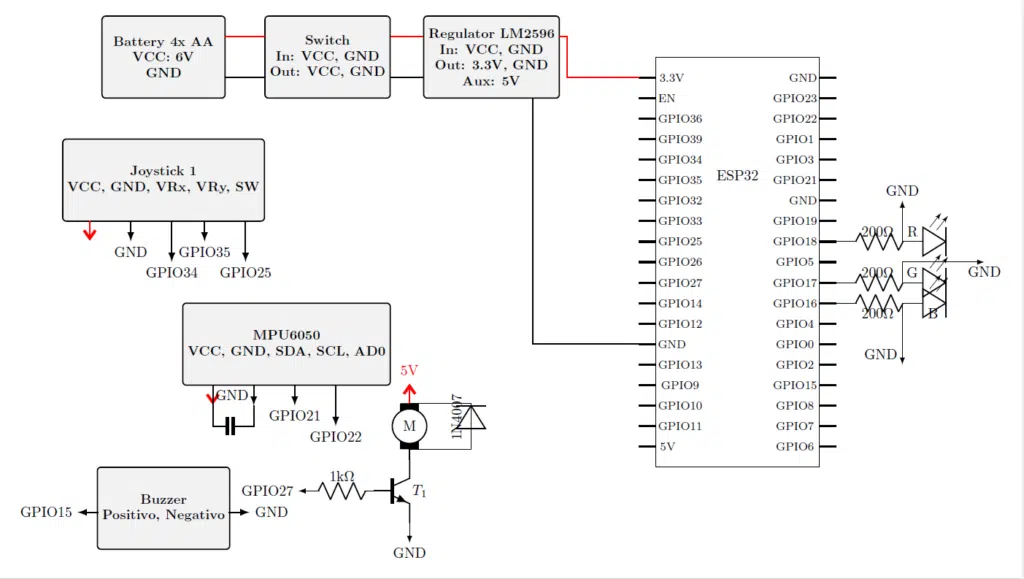

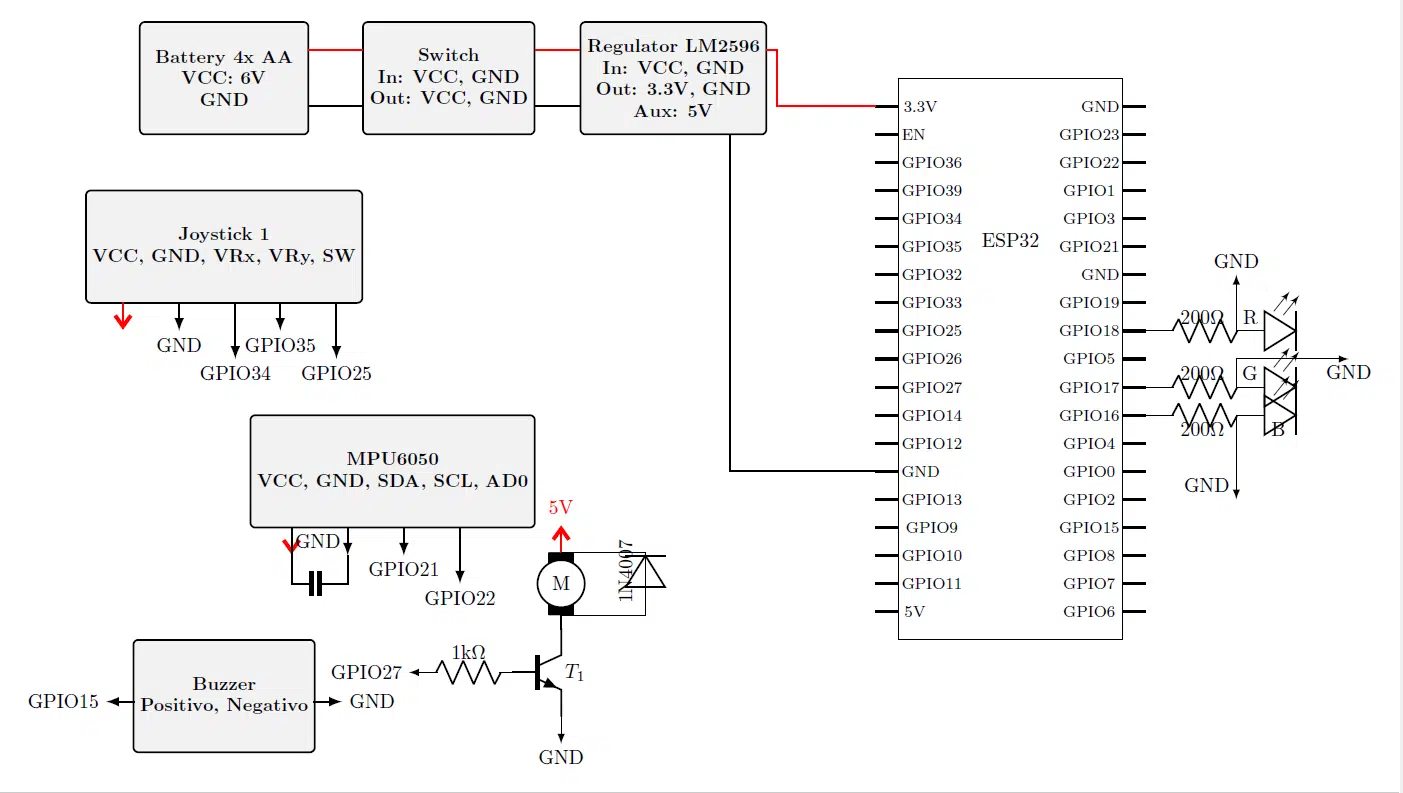

The ESP32 microcontroller offers technical specifications well suited to real-time simulation. Its dual-core Xtensa® 32-bit LX6 processor runs at up to 240 MHz, which allows efficient data processing. The chip has 520 KB of on-chip SRAM, and common modules such as the ESP32-WROOM-32 add 4 MB of external SPI flash (the amount varies by module), which is ample for sensor processing and communication firmware.

The microcontroller includes Wi-Fi (802.11 b/g/n) and Bluetooth v4.2 BR/EDR and BLE capabilities, enabling seamless communication with external devices. Its GPIO pins provide versatile connectivity options for peripherals like sensors and controllers. These features make the ESP32 a reliable choice for drone simulation projects requiring real-time data acquisition and processing.

| Specification | Details |
|---|---|
| Processor | Dual-core Xtensa® 32-bit LX6 microprocessor |
| Clock Speed | Up to 240 MHz |
| Flash Memory | 4 MB (varies by model) |
| SRAM | 520 KB |
| Wi-Fi | 802.11 b/g/n |
| Bluetooth | v4.2 BR/EDR and BLE |
| Operating Voltage | 3.3 V |
| GPIO Pins | 34 (multipurpose) |

Integration possibilities between Unity and ESP32

The integration of Unity and the ESP32 microcontroller opens up exciting possibilities for drone simulation projects. Unity’s software capabilities complement the ESP32’s hardware features, enabling seamless communication between virtual environments and physical devices. Developers can use the ESP32 to process real-time data from sensors, such as gyroscopes and accelerometers, and relay this information to Unity for visualization and analysis.

This integration supports interactive simulations where users can control drones using peripherals like joysticks or controllers. Additionally, the ESP32’s Wi-Fi and Bluetooth capabilities facilitate wireless communication, allowing developers to create dynamic and responsive simulation setups. Projects like MetavoltVR demonstrate the potential of combining Unity and microcontrollers to enhance educational and interactive experiences.

| Project Name | Students | Mentors | Summary |
|---|---|---|---|
| MetavoltVR | Nicholas Amely, Jesus Leyva | Dr. Wei Wu | A VR application for circuit building developed using Unity, aimed at enhancing engineering education through interactive learning. |

Key Components of a Drone Simulator

Realistic physics and flight dynamics

Realistic physics and flight dynamics form the backbone of any effective drone simulator. Accurate modeling of aerodynamics, wind behavior, and environmental factors ensures that the simulation mirrors real-world conditions. Developers often incorporate advanced physics engines to simulate forces like lift, drag, and thrust, which are essential for understanding drone behavior during flight.
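To illustrate how thrust, weight, and drag combine, the following minimal Python sketch integrates vertical flight only: total rotor thrust acts upward, weight acts downward, and a quadratic drag term opposes velocity. The mass, drag coefficient, and time step are hypothetical values chosen for illustration. In Unity, the Rigidbody would normally perform the integration while a script applies the forces.

```python
from dataclasses import dataclass

G = 9.81  # gravitational acceleration, m/s^2


@dataclass
class VerticalState:
    z: float = 0.0   # altitude above ground, m (positive up)
    vz: float = 0.0  # vertical velocity, m/s


def vertical_accel(thrust_n: float, vz: float, mass_kg: float, drag_coeff: float) -> float:
    """Net vertical acceleration from rotor thrust, weight, and quadratic drag."""
    drag_n = -drag_coeff * vz * abs(vz)  # always opposes the direction of motion
    return (thrust_n + drag_n) / mass_kg - G


def step(state: VerticalState, thrust_n: float, dt: float,
         mass_kg: float = 1.2, drag_coeff: float = 0.1) -> VerticalState:
    """Advance one step with semi-implicit Euler: update velocity, then position."""
    a = vertical_accel(thrust_n, state.vz, mass_kg, drag_coeff)
    vz = state.vz + a * dt
    z = state.z + vz * dt
    if z < 0.0:  # the ground acts as a hard floor
        z, vz = 0.0, 0.0
    return VerticalState(z, vz)


if __name__ == "__main__":
    hover_thrust = 1.2 * G  # thrust that exactly balances a 1.2 kg airframe
    s = VerticalState()
    for _ in range(200):  # 2 s at 100 Hz with 20 % extra thrust
        s = step(s, 1.2 * hover_thrust, dt=0.01)
    print(f"after 2 s: z = {s.z:.2f} m, vz = {s.vz:.2f} m/s")
```

With 20 % extra thrust, the climb rate approaches a terminal velocity where drag balances the surplus thrust. Replacing the constant thrust with a controller output closes the loop.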

Simulators such as DroneWiS demonstrate the importance of realistic physics through experimental comparisons. For instance, DroneWiS replicates wind effects around obstacles, whereas AirSim’s wind simulation does not account for obstacles, which produces smoother but less realistic flight paths. DroneWiS results also highlight the impact of dynamic wind behavior in urban environments, where variations in wind velocity significantly affect drone stability.

| Evidence Description | Findings |
|---|---|
| Comparison of flight paths between DroneWiS and AirSim | DroneWiS accurately simulates wind effects, while AirSim shows a smooth flight path due to lack of obstacle consideration. |
| Replication of system failures due to wind | DroneWiS generated insights on system robustness in windy conditions, valuable for sUAS developers. |
| Evaluation of dynamic wind behavior in urban environments | DroneWiS demonstrated significant wind velocity variations affecting sUAS flight paths, unlike AirSim. |
| Observations of chaotic flight behavior | As sUAS ascended, strong winds caused erratic flight patterns, validating the need for realistic wind modeling. |

By incorporating these elements, developers can create simulations that not only enhance training but also aid in prototyping drones for challenging environments.

State spaces and control systems

State spaces and control systems provide a mathematical framework for analyzing and stabilizing drone flight. State space modeling simplifies the implementation of control techniques by representing the drone’s dynamics through variables such as position, velocity, and angular orientation. This approach allows developers to compute equilibrium points, which are crucial for understanding the stability of the system.

Research conducted in MATLAB/Simulink highlights the value of state-space models for simulation stability. Using six state variables, the study analyzed quadrotor stability and showed how these variables determine the equilibrium points. This analysis lets developers predict and mitigate instability during flight, leading to smoother and more reliable drone operation.
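The equilibrium-point idea can be made concrete with a small nonlinear model. The sketch below assumes one possible choice of six state variables (altitude, vertical velocity, roll, roll rate, pitch, pitch rate), three inputs (total thrust and two torques), and hypothetical mass and inertia values. It is not the model from the study. It computes the level-hover equilibrium, where every derivative is zero, and linearizes around it numerically to obtain the A and B matrices of the state-space form dx/dt ≈ A·δx + B·δu.

```python
import math

G = 9.81     # m/s^2
MASS = 1.2   # kg, hypothetical airframe
IXX = 0.02   # kg*m^2, roll inertia (hypothetical)
IYY = 0.02   # kg*m^2, pitch inertia (hypothetical)


def dynamics(x, u):
    """x = [z, w, phi, p, theta, q]; u = [thrust, tau_roll, tau_pitch]. Returns dx/dt."""
    _, w, phi, p, theta, q = x
    thrust, tau_roll, tau_pitch = u
    return [
        w,                                                    # dz/dt
        thrust * math.cos(phi) * math.cos(theta) / MASS - G,  # dw/dt
        p,                                                    # dphi/dt
        tau_roll / IXX,                                       # dp/dt
        q,                                                    # dtheta/dt
        tau_pitch / IYY,                                      # dq/dt
    ]


def hover_equilibrium(altitude):
    """Level hover: zero rates and torques, thrust equal to weight."""
    x_eq = [altitude, 0.0, 0.0, 0.0, 0.0, 0.0]
    u_eq = [MASS * G, 0.0, 0.0]
    return x_eq, u_eq


def jacobians(x0, u0, eps=1e-6):
    """Central-difference linearization around (x0, u0): returns A (n x n) and B (n x m)."""
    n, m = len(x0), len(u0)
    A = [[0.0] * n for _ in range(n)]
    B = [[0.0] * m for _ in range(n)]
    for j in range(n):
        xp, xm = list(x0), list(x0)
        xp[j] += eps
        xm[j] -= eps
        fp, fm = dynamics(xp, u0), dynamics(xm, u0)
        for i in range(n):
            A[i][j] = (fp[i] - fm[i]) / (2 * eps)
    for j in range(m):
        up, um = list(u0), list(u0)
        up[j] += eps
        um[j] -= eps
        fp, fm = dynamics(x0, up), dynamics(x0, um)
        for i in range(n):
            B[i][j] = (fp[i] - fm[i]) / (2 * eps)
    return A, B
```

At this hover point, the linearized A matrix contains only integrator links (altitude from vertical velocity, roll from roll rate, pitch from pitch rate), so all of its eigenvalues are zero. The open-loop hover is therefore not asymptotically stable on its own, which is why a feedback controller is needed.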

State spaces also facilitate the integration of advanced control systems, enabling drones to adapt to changing conditions. By leveraging this mathematical modeling, developers can design simulations that accurately reflect real-world challenges, making them invaluable for both educational and practical applications.

PID control for flight stability

Proportional-Integral-Derivative (PID) control plays a vital role in maintaining flight stability in drone simulators. This control technique adjusts the drone’s motor speeds based on feedback from sensors, ensuring that the drone remains balanced and responsive during flight. PID controllers are widely used due to their simplicity and effectiveness in achieving stable flight under varying conditions.
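The following minimal discrete PID loop, written in Python, shows how the three terms combine. The gains, time step, output limits, and the simple anti-windup rule (stop integrating while the output is saturated) are illustrative assumptions. Real flight controllers typically run one such loop per axis and tune gains for each airframe.

```python
class PID:
    """Discrete PID controller with output limits and conditional-integration anti-windup."""

    def __init__(self, kp: float, ki: float, kd: float, dt: float,
                 out_min: float = float("-inf"), out_max: float = float("inf")):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = None  # no derivative term on the very first sample

    def reset(self) -> None:
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint: float, measurement: float) -> float:
        error = setpoint - measurement
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / self.dt
        self.prev_error = error
        candidate_integral = self.integral + error * self.dt
        output = self.kp * error + self.ki * candidate_integral + self.kd * derivative
        clamped = min(max(output, self.out_min), self.out_max)
        if clamped == output:
            # Commit the integral only while unsaturated, so it cannot wind up.
            self.integral = candidate_integral
        return clamped
```

In a Unity simulation, `update()` would typically be called once per physics step (for example, from `FixedUpdate`), with the output mapped to motor thrust.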

Extensive flight testing has validated the reliability of PID control in drone simulations. Studies comparing a baseline PID controller with an optimized piecewise controller show that the latter achieves faster response times while keeping overshoot and steady-state error at acceptable levels. These benchmarks suggest that a PID baseline is a dependable starting point and that refinements such as piecewise tuning can improve responsiveness further, which makes PID-based control a common choice for stable and responsive drone simulations.

By incorporating PID control, developers can closely simulate real-world flight scenarios, enabling users to train effectively and test drone designs under diverse conditions.

Communication protocols for Unity and ESP32

Effective communication between Unity and the ESP32 microcontroller is essential for creating a seamless drone simulation experience. Developers rely on various communication protocols to ensure reliable data exchange and low latency. These protocols enable Unity to interact with the ESP32, process sensor inputs, and control peripherals in real time.
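Whichever transport carries the data, Unity and the ESP32 have to agree on a message layout. The sketch below shows one hypothetical binary format using Python's `struct` module: a start byte, a 16-bit sequence number, four float32 control channels, and an XOR checksum. The field choice and names are assumptions for illustration, not a standard.

```python
import struct

# Hypothetical wire format: little-endian, packed (no padding).
# start byte, uint16 sequence number, four float32 channels, then a 1-byte XOR checksum.
START_BYTE = 0xA5
BODY_FORMAT = "<BHffff"
BODY_SIZE = struct.calcsize(BODY_FORMAT)  # 19 bytes
PACKET_SIZE = BODY_SIZE + 1               # plus the checksum byte


def xor_checksum(data: bytes) -> int:
    c = 0
    for b in data:
        c ^= b
    return c


def encode(seq: int, roll: float, pitch: float, yaw: float, throttle: float) -> bytes:
    body = struct.pack(BODY_FORMAT, START_BYTE, seq & 0xFFFF, roll, pitch, yaw, throttle)
    return body + bytes([xor_checksum(body)])


def decode(packet: bytes):
    """Return (seq, roll, pitch, yaw, throttle), or None if the packet is malformed."""
    if len(packet) != PACKET_SIZE:
        return None
    body, checksum = packet[:-1], packet[-1]
    if body[0] != START_BYTE or xor_checksum(body) != checksum:
        return None
    _, seq, roll, pitch, yaw, throttle = struct.unpack(BODY_FORMAT, body)
    return seq, roll, pitch, yaw, throttle
```

Both the ESP32 and typical desktop CPUs are little-endian, so firmware can fill the same byte layout directly, and the C# side in Unity could decode it with `BitConverter`.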

Common Communication Protocols

Several communication protocols are available for connecting Unity with the ESP32. Each protocol offers unique advantages based on speed, latency, and use case. The most commonly used protocols include:

- Wi-Fi Communication: Wi-Fi is a popular choice for connecting Unity and the ESP32. It supports high-speed data transfer and wireless communication across a local network. Developers can use Wi-Fi to send sensor data from the ESP32 to Unity or to control the drone remotely. It is ideal for applications requiring continuous data monitoring and logging.
- Bluetooth Communication: Bluetooth provides a convenient short-range connection between Unity and the ESP32 and suits scenarios where low power consumption is a priority. Bluetooth Low Energy (BLE) is particularly useful for transmitting small amounts of data, such as joystick inputs or sensor readings, to Unity.
- Serial Communication: Serial (UART) communication, usually carried over the USB-to-UART bridge on ESP32 development boards, is a reliable wired method for exchanging data between Unity and the ESP32. It is widely used for debugging and data logging, letting developers monitor real-time data from the ESP32 and analyze it within Unity’s environment.
- ESP-NOW Protocol: ESP-NOW is a proprietary protocol developed by Espressif Systems for low-latency, connectionless communication between Espressif devices without requiring a Wi-Fi network. Because a PC cannot speak ESP-NOW directly, Unity typically reaches an ESP-NOW link through a second ESP32 that acts as a bridge over USB serial. This protocol is particularly effective for links such as drone control, where rapid response times are critical.
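Serial reads, including the output of an ESP32 bridge board connected over USB, arrive in arbitrary chunks, so the receiving side has to reassemble complete messages. The following minimal sketch assumes that the firmware prints one newline-terminated line per sample in a hypothetical `roll,pitch,yaw,throttle` CSV format:

```python
class LineFramer:
    """Reassemble newline-terminated text frames from arbitrary serial read chunks."""

    def __init__(self, max_line: int = 256):
        self.buffer = bytearray()
        self.max_line = max_line

    def feed(self, chunk: bytes):
        """Add raw bytes and return a list of complete lines (without the terminator)."""
        self.buffer.extend(chunk)
        lines = []
        while True:
            idx = self.buffer.find(b"\n")
            if idx < 0:
                break
            line = bytes(self.buffer[:idx]).rstrip(b"\r")  # tolerate CRLF endings
            del self.buffer[:idx + 1]
            lines.append(line.decode("ascii", errors="replace"))
        if len(self.buffer) > self.max_line:
            self.buffer.clear()  # discard garbage that never produced a newline
        return lines


def parse_sample(line: str):
    """Parse a hypothetical 'roll,pitch,yaw,throttle' line into floats, or None if malformed."""
    parts = line.split(",")
    if len(parts) != 4:
        return None
    try:
        return tuple(float(p) for p in parts)
    except ValueError:
        return None
```

With the third-party pyserial package, the chunks could come from a loop that passes each `ser.read(ser.in_waiting or 1)` result to `feed()`, where `ser = serial.Serial("/dev/ttyUSB0", 115200, timeout=0.1)`. The port name and baud rate are placeholders.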

The table below summarizes indicative figures for these protocols. Actual throughput and latency depend on hardware, configuration, and signal conditions:

| Protocol | Max Speed | Latency | Use Case Description |
|---|---|---|---|
| Wi-Fi | 54 Mbps | 10-50 ms | Ideal for continuous data monitoring and logging. |
| Bluetooth | 2 Mbps | 20-100 ms | Suitable for short-range, low-power applications. |
| Serial | 115.2 kbps (common baud rate) | 1-10 ms | Useful for debugging and data logging. |
| ESP-NOW | Not specified | 5 ms | Ideal for low-latency applications like drone control. |

Choosing the Right Protocol

Selecting the appropriate communication protocol depends on the specific requirements of the drone simulator. For instance, developers prioritizing low latency may choose ESP-NOW for the controller link, while those who need longer range and higher throughput might opt for Wi-Fi. Serial communication over USB remains a reliable option for scenarios requiring robust data logging and monitoring.
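A quick bandwidth budget can help with this choice. The sketch below estimates the bit rate a telemetry stream needs and the share of a link it would use. For UART, it counts the start and stop bits of 8N1 framing (10 bits per byte). It deliberately ignores the much larger protocol overheads of Wi-Fi and Bluetooth, so results for wireless links should be treated as a lower bound. The packet size and rate in the example are hypothetical.

```python
def uart_bits_per_byte(data_bits: int = 8, parity: bool = False, stop_bits: int = 1) -> int:
    """Bits on the wire per byte: start bit + data bits + optional parity + stop bits."""
    return 1 + data_bits + (1 if parity else 0) + stop_bits


def required_bps(payload_bytes: int, rate_hz: float, bits_per_byte: int = 8) -> float:
    """Raw bit rate needed to send payload_bytes at rate_hz."""
    return payload_bytes * bits_per_byte * rate_hz


def link_utilization(payload_bytes: int, rate_hz: float, link_bps: float,
                     bits_per_byte: int = 8) -> float:
    """Fraction of link capacity consumed; values near or above 1.0 will not keep up."""
    return required_bps(payload_bytes, rate_hz, bits_per_byte) / link_bps


def max_rate_hz(payload_bytes: int, link_bps: float, bits_per_byte: int = 8) -> float:
    """Highest message rate the link could sustain with no other traffic."""
    return link_bps / (payload_bytes * bits_per_byte)


if __name__ == "__main__":
    bpb = uart_bits_per_byte()  # 8N1 framing: 10 bits per byte
    # Hypothetical stream: 20-byte packets at 200 Hz over a 115200-baud serial link.
    print(f"needs {required_bps(20, 200, bpb):.0f} bit/s, "
          f"uses {link_utilization(20, 200, 115_200, bpb):.0%} of the link, "
          f"ceiling {max_rate_hz(20, 115_200, bpb):.0f} Hz")
```

For the example stream, this works out to 40,000 bit/s, about 35 % of a 115,200-baud link, with a ceiling of 576 messages per second.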

Unity’s flexibility allows developers to integrate multiple protocols into a single simulation setup. This approach ensures that the simulator can handle diverse tasks, such as real-time data exchange, peripheral control, and data storage. By leveraging these protocols, developers can create a responsive and immersive simulation experience.

Tip: Combining protocols like Wi-Fi and ESP-NOW can enhance the simulator’s performance by balancing speed and reliability.

Setting Up Unity and ESP32 for the Simulator

Configuring Unity for simulation

Configuring Unity for a drone simulator requires careful setup to ensure optimal performance and realistic simulation. Developers can follow a series of steps to prepare Unity for simulation tasks:

- Install the Performance Testing package (`com.unity.test-framework.performance`) by following the official guidelines. This package helps measure and optimize simulation performance.
- Add the package as a dependency in the project manifest (`manifest.json`) located in the `<project>/Packages` directory. This step integrates the package into the Unity project.
- Reference `Unity.PerformanceTesting` in the PlayModeTests assembly definition. This gives the tests access to the performance testing APIs for evaluating simulation efficiency.
- Create a new C# class named `PerformanceTests.cs` under `Assets/Tests/PlayModeTests`. Implement a function that measures average frames per second (FPS) using `Time.deltaTime` and records the result with `Measure.Custom`.

Unity’s Performance Testing package provides additional tools for analyzing simulation results. Developers can use `Measure.Frames().Scope()` to record frame times for a block of test code, ideally after a newly loaded scene has had a few frames to stabilize. The Performance Test Report in Unity offers insights into FPS trends, helping developers identify areas for improvement.
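The Unity test itself would be written in C#, with the computed value passed to `Measure.Custom`, but the averaging arithmetic is worth getting right in any language. The following Python sketch, using hypothetical function names, contrasts two measures. Frames divided by elapsed time gives the frame rate actually delivered. The mean of per-frame `1/deltaTime` values over-weights fast frames and hides hitches. The sketch also reports a high-percentile frame time.

```python
import math
from statistics import mean


def average_fps(delta_times):
    """Frames divided by elapsed time: the frame rate actually delivered."""
    if not delta_times:
        raise ValueError("need at least one frame")
    return len(delta_times) / sum(delta_times)


def mean_instantaneous_fps(delta_times):
    """Mean of 1/dt per frame; over-weights fast frames and masks stalls."""
    return mean(1.0 / dt for dt in delta_times)


def percentile_frame_time(delta_times, pct):
    """Nearest-rank percentile of frame times (e.g. pct=99 for the slowest 1 %)."""
    ordered = sorted(delta_times)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]
```

For example, a single 0.5 s stall among 59 frames at 60 FPS brings the delivered rate down to about 40 FPS, while the per-frame mean still reports about 59 FPS.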

Tip: Regularly monitor FPS using the Performance Testing package to ensure smooth and responsive simulations.

Setting up the ESP32 microcontroller

Setting up the ESP32 microcontroller involves preparing the hardware and firmware for integration with Unity. Developers can begin by selecting the appropriate ESP32 variant based on project requirements. For drone simulators, models like the ESP32-WROOM-32 or ESP32-WROVER are ideal due to their robust processing capabilities and memory support.

To configure the ESP32, developers must install the ESP-IDF (Espressif IoT Development Framework). This framework provides essential tools for building and flashing firmware onto the microcontroller. The setup process includes:

- Installing ESP-IDF: Download and install the ESP-IDF framework from the official Espressif website. Ensure the environment variables are configured correctly, for example by running the export script included with ESP-IDF.
- Building the firmware: Compile the custom firmware with the ESP-IDF build system (`idf.py build`). The firmware should support peripherals like sensors and controllers, and it can include unit tests written with Unity, the C unit-testing framework bundled with ESP-IDF (unrelated to the Unity game engine).
- Flashing the firmware: Load the compiled firmware onto the ESP32 with `idf.py -p <PORT> flash`, which uses `esptool.py` under the hood. This step prepares the microcontroller for communication with the simulator.

Developers can then run these on-device unit tests, covering cases such as button interactions or sensor data processing, to confirm that the microcontroller is ready for simulation tasks.

Note: Use a current stable release of ESP-IDF to access updated features and bug fixes.

Establishing communication between Unity and ESP32

Establishing a reliable connection between Unity and the ESP32 is crucial for real-time data exchange and peripheral control. Developers can choose from several communication protocols based on project needs. Wi-Fi and Bluetooth are popular options for wireless communication, while ESP-NOW offers low-latency data transfer.

Before streaming data to the Unity simulation, developers can verify the firmware on the device with these steps:

- Build the test firmware using the ESP-IDF build system, including unit tests (written with the Unity C test framework) for components like buttons or sensors.
- Flash the firmware onto the ESP32 device so the microcontroller is ready for testing.
- Execute a `pytest` script, such as `pytest_button.py`, to automate initialization and testing on the device. This validates the firmware and its peripherals before the board is connected to the Unity simulation.

In a combined setup, Wi-Fi can handle continuous data monitoring, an ESP-NOW link can provide rapid response times for drone control, and serial communication remains available for debugging and data logging.
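On the PC side, Unity would typically receive Wi-Fi data over UDP (in C#, with `System.Net.Sockets.UdpClient`). The same receive pattern is shown here as a Python sketch, which can be used to check what the ESP32 sends before wiring it into Unity. It assumes a hypothetical payload of three little-endian float32 values (roll, pitch, yaw) per datagram. For control data, it keeps only the newest sample, so stale packets never make the simulation lag. Addresses and ports are placeholders.

```python
import select
import socket
import struct

FORMAT = "<fff"  # hypothetical payload: roll, pitch, yaw as little-endian float32
SIZE = struct.calcsize(FORMAT)


def make_receiver(host: str = "127.0.0.1", port: int = 0) -> socket.socket:
    """Bind a non-blocking UDP socket; port=0 lets the OS choose a free port."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((host, port))
    sock.setblocking(False)
    return sock


def send_attitude(sock: socket.socket, addr, roll: float, pitch: float, yaw: float) -> None:
    sock.sendto(struct.pack(FORMAT, roll, pitch, yaw), addr)


def receive_latest(sock: socket.socket, wait: float = 0.0):
    """Wait up to `wait` seconds for data, drain the queue, and return the newest valid sample."""
    ready, _, _ = select.select([sock], [], [], wait)
    if not ready:
        return None
    latest = None
    while True:
        try:
            data, _ = sock.recvfrom(2048)
        except BlockingIOError:
            break  # queue drained
        if len(data) == SIZE:  # silently skip datagrams of the wrong size
            latest = struct.unpack(FORMAT, data)
    return latest
```

For a real board, the receiver would bind to `0.0.0.0` on a fixed port that the ESP32 firmware targets. UDP fits this kind of stream because a lost sample is quickly superseded by the next one, whereas waiting for retransmission would add latency.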


By following these steps, developers can create a robust communication framework that supports interactive and immersive simulation experiences.

Testing and debugging the setup

Testing and debugging are essential steps in ensuring the drone simulator operates efficiently and accurately. Developers must evaluate both the Unity simulation environment and the ESP32 microcontroller to identify and resolve potential issues. A systematic approach to testing and debugging can help uncover vulnerabilities, optimize performance, and improve the overall reliability of the simulator.

Testing the Unity Simulation Environment

Unity provides several tools and techniques for testing the simulation environment. Developers can use these tools to verify that the virtual drone behaves as expected under various conditions. Key testing strategies include:

- Performance Testing: Developers should monitor the simulator’s frame rate and responsiveness using Unity’s Performance Testing package. This ensures the simulation runs smoothly without lag or stuttering.
- Scenario Testing: Creating diverse scenarios, such as windy environments or obstacle-filled terrains, helps evaluate the drone’s behavior in challenging conditions.
- Behavioral Testing: Testing the drone’s response to user inputs, such as joystick movements, ensures accurate control and interaction.

Tip: Use Unity’s built-in debugging tools, such as the Console window, to identify and fix errors in scripts or configurations.

Debugging the ESP32 Microcontroller

The ESP32 microcontroller requires thorough testing to ensure it communicates effectively with Unity and processes data from peripherals accurately. Developers can follow these steps to debug the ESP32:

- Firmware Validation: Test the custom firmware on the ESP32 to verify that it supports all required functionalities, such as sensor data processing and communication protocols.
- Peripheral Testing: Connect peripherals like gyroscopes, accelerometers, and joysticks to the ESP32 and test their functionality individually.
- Communication Testing: Use tools like serial monitors or Wi-Fi analyzers to check the data exchange between the ESP32 and Unity.
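Dropped or reordered packets are easier to spot during communication testing if each message carries an incrementing sequence number. The following small tracker on the receiving side counts losses. It assumes that the firmware adds a 16-bit counter that wraps after 65535, which is an assumption since the setup above does not prescribe a message format.

```python
class SequenceTracker:
    """Count lost and out-of-order packets from a wrapping 16-bit sequence number."""

    MODULUS = 1 << 16

    def __init__(self):
        self.expected = None  # next sequence number we expect to see
        self.received = 0
        self.lost = 0
        self.out_of_order = 0

    def observe(self, seq: int) -> None:
        self.received += 1
        if self.expected is None:  # first packet just sets the baseline
            self.expected = (seq + 1) % self.MODULUS
            return
        gap = (seq - self.expected) % self.MODULUS
        if gap == 0:
            self.expected = (seq + 1) % self.MODULUS
        elif gap < self.MODULUS // 2:
            # Jumped ahead: the packets in between have not arrived (yet).
            self.lost += gap
            self.expected = (seq + 1) % self.MODULUS
        else:
            # Older than expected: a late or duplicate packet.
            self.out_of_order += 1

    @property
    def loss_ratio(self) -> float:
        total = self.received + self.lost
        return self.lost / total if total else 0.0
```

A packet that was counted as lost but arrives later is recorded as out of order rather than subtracted from the loss count, which keeps the logic simple.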

Troubleshooting Strategies

Effective troubleshooting involves identifying the root cause of issues and implementing targeted solutions. Developers can use the following strategies to address common problems:

- Error Logging: Enable detailed error logs on both Unity and the ESP32 to capture information about failures or unexpected behavior.
- Incremental Testing: Test individual components, such as sensors or communication protocols, before integrating them into the full simulator.
- Stress Testing: Simulate extreme conditions, such as high data loads or rapid user inputs, to evaluate the system’s robustness.

Case Studies and Metrics for Debugging

Case studies provide valuable insights into effective debugging strategies. For example, researchers have generated challenging test cases to identify vulnerabilities in the PX4 avoidance system. These tests classify failures as either hard or soft based on collision and safety distance criteria:

- A hard fail occurs when the drone collides with an obstacle.
- A soft fail happens when the drone fails to maintain a minimum safe distance of 1.5 meters from obstacles.
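The hard and soft fail criteria above translate directly into a per-run classifier. This sketch assumes that the simulator logs the distance from the drone to the nearest obstacle at each sample, plus a collision flag. How those values are obtained is simulator-specific, and treating a distance of zero or less as contact is an assumption.

```python
import math
from collections import Counter
from enum import Enum


class Outcome(Enum):
    PASS = "pass"
    SOFT_FAIL = "soft fail"  # came closer than the safety distance
    HARD_FAIL = "hard fail"  # collided with an obstacle


SAFE_DISTANCE_M = 1.5  # minimum safe distance from the criteria above


def classify_run(distances_m, collided=False, safe_distance_m=SAFE_DISTANCE_M):
    """Classify one test flight from logged drone-to-nearest-obstacle distances."""
    if collided or any(d <= 0.0 for d in distances_m):
        return Outcome.HARD_FAIL
    if min(distances_m, default=math.inf) < safe_distance_m:
        return Outcome.SOFT_FAIL
    return Outcome.PASS


def summarize(runs):
    """Tally outcomes over an iterable of (distances, collided) pairs."""
    return Counter(classify_run(d, c) for d, c in runs)
```

Tallying outcomes across many generated scenarios gives the kind of metric that can be tracked between firmware or controller revisions.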

By analyzing these metrics, developers can refine their testing processes and improve the simulator’s reliability.

Note: Always document test results and debugging steps to track progress and identify recurring issues.

Final Testing and Optimization

After addressing all identified issues, developers should conduct a final round of testing to ensure the simulator meets performance and functionality requirements. This includes:

- Verifying that the drone’s flight dynamics align with real-world physics.
- Ensuring seamless communication between Unity and the ESP32.
- Testing user interactions, such as joystick controls, for accuracy and responsiveness.

By following these testing and debugging practices, developers can create a robust and reliable drone simulator that delivers an immersive and educational experience.

Enhancing the Simulator with ESPHome and Peripherals

Using ESPHome for peripheral integration

ESPHome simplifies the integration of peripherals into the drone simulator by providing a user-friendly platform for configuring and managing connected devices. The ESPHome framework allows developers to write YAML-based configurations, enabling seamless communication between the ESP32 and various hardware components. This approach largely removes the need for custom firmware code, making it accessible for beginners and professionals alike.

Several examples highlight the effectiveness of ESPHome in managing peripherals:

- The ESPClicker module, based on the ESP8266, automates non-smart devices by remotely activating buttons. It uses three relays to connect to existing hardware, streamlining automation processes.
- Developers can use ESPHome to integrate sensors, controllers, and other peripherals into the simulator, ensuring smooth operation and real-time data exchange.

By leveraging ESPHome, developers can enhance the simulator’s functionality while reducing development time and effort.

Adding sensors like gyroscopes and accelerometers

Sensors like gyroscopes and accelerometers play a crucial role in creating a realistic drone simulation. These sensors provide essential data on motion, orientation, and acceleration, enabling accurate modeling of drone behavior. The ESP32 processes this data in real time, ensuring the simulator responds dynamically to changes in flight conditions.
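A common way to turn raw gyroscope and accelerometer readings into an attitude estimate, on the ESP32 or in Unity, is a complementary filter. Integrated gyro rates are smooth but drift, while the accelerometer's gravity direction is noisy but drift-free. The following sketch blends the two with a weight `alpha`. It is one standard technique, not necessarily what a given simulator uses. The axis convention (z reads +1 g when level), the 0.98 weight, and treating body rates as Euler-angle rates (a small-angle approximation) are assumptions.

```python
import math


def accel_tilt(ax: float, ay: float, az: float):
    """Roll and pitch (rad) from the measured gravity vector; valid only when not accelerating."""
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return roll, pitch


class ComplementaryFilter:
    """Blend integrated gyro rates (smooth, drifting) with accelerometer tilt (noisy, drift-free)."""

    def __init__(self, alpha: float = 0.98):
        self.alpha = alpha  # weight of the gyro path; the accelerometer gets 1 - alpha
        self.roll = 0.0
        self.pitch = 0.0

    def update(self, gx: float, gy: float, ax: float, ay: float, az: float, dt: float):
        """gx, gy: roll and pitch rates in rad/s; ax, ay, az in any consistent unit (e.g. g)."""
        acc_roll, acc_pitch = accel_tilt(ax, ay, az)
        # Small-angle assumption: body rates are used directly as Euler-angle rates.
        self.roll = self.alpha * (self.roll + gx * dt) + (1 - self.alpha) * acc_roll
        self.pitch = self.alpha * (self.pitch + gy * dt) + (1 - self.alpha) * acc_pitch
        return self.roll, self.pitch
```

With `alpha = 0.98` and a 100 Hz update rate, the accelerometer correction acts with a time constant of roughly alpha·dt/(1 - alpha) ≈ 0.49 s. Raising `alpha` trusts the gyro longer and smooths the output more.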

Key specifications of a representative IMU include the following (exact figures vary by part, so check the datasheet):

- Accelerometer: Offers acceleration ranges of ±2 g, ±4 g, ±8 g, and ±16 g. Its low-pass filter bandwidth ranges from 1 kHz to <8 Hz, ensuring precise measurements.
- Gyroscope: Features switchable ranges from ±125°/s to ±2000°/s. Its low-pass filter bandwidths range from 523 Hz to 12 Hz, providing high accuracy and responsiveness.
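Digital IMUs usually report each axis as a signed integer whose meaning depends on the selected range: the full signed count span maps to ±range. The following sketch converts raw readings to g and °/s. The intermediate gyro steps (250, 500, and 1000 °/s) are assumptions, as are the default 16-bit resolution and little-endian register byte order. Some accelerometers deliver 12 or 14 bits, so `bits` must match the datasheet, and `raw` must already be sign-extended.

```python
ACCEL_RANGES_G = (2, 4, 8, 16)
# The text gives ±125 to ±2000 °/s; the intermediate steps here are an assumption.
GYRO_RANGES_DPS = (125, 250, 500, 1000, 2000)


def to_signed16(lsb: int, msb: int) -> int:
    """Combine two register bytes into a signed 16-bit value (two's complement)."""
    value = (msb << 8) | lsb
    return value - 0x10000 if value & 0x8000 else value


def _scale(raw: int, full_scale: float, bits: int) -> float:
    """Map a signed count to physical units: 2**(bits-1) counts equal full_scale."""
    return raw * full_scale / (1 << (bits - 1))


def accel_g(raw: int, range_g: int, bits: int = 16) -> float:
    if range_g not in ACCEL_RANGES_G:
        raise ValueError(f"unsupported accelerometer range: ±{range_g} g")
    return _scale(raw, range_g, bits)


def gyro_dps(raw: int, range_dps: int, bits: int = 16) -> float:
    if range_dps not in GYRO_RANGES_DPS:
        raise ValueError(f"unsupported gyroscope range: ±{range_dps} °/s")
    return _scale(raw, range_dps, bits)
```

Choosing a narrower range gives finer resolution per count, while a wider range avoids clipping during aggressive maneuvers.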

Integrating these sensors into the simulator enhances its realism, making it an invaluable tool for training and testing drone designs.

Integrating joysticks and controllers

Joysticks and controllers add an interactive element to the drone simulator, allowing users to experience realistic flight controls. The ESP32 facilitates the integration of these peripherals by processing input data and relaying it to the simulation environment.

Users have reported significant improvements in their drone skills after integrating joysticks into simulators. For example:

- Transforming a flight controller into a joystick provides a wireless connection with low latency, mimicking real-world flying conditions.
- Configuring simulator settings, akin to fine-tuning drone controls, creates an authentic training experience.

Careful calibration of joysticks and controllers ensures accurate input mapping, enabling users to practice complex maneuvers with confidence.
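Calibration usually means recording each axis's end stops and rest position, then mapping raw readings to a normalized range with a small deadzone so that a released stick reads exactly zero. The following sketch assumes the stick is read through the ESP32's ADC (12-bit by default, 0 to 4095). The two halves of travel are scaled separately, because the rest position is rarely exactly mid-scale. All calibration values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AxisCalibration:
    raw_min: int             # reading at one end stop, recorded during calibration
    raw_center: int          # reading with the stick released
    raw_max: int             # reading at the other end stop
    deadzone: float = 0.05   # fraction of travel around center treated as zero

    def normalize(self, raw: int) -> float:
        """Map a raw ADC reading to [-1, 1], with a deadzone around the center."""
        if raw >= self.raw_center:
            span = self.raw_max - self.raw_center
        else:
            span = self.raw_center - self.raw_min
        value = (raw - self.raw_center) / span if span else 0.0
        value = max(-1.0, min(1.0, value))  # clamp readings beyond the recorded end stops
        if abs(value) < self.deadzone:
            return 0.0
        # Rescale so the output ramps from 0 at the deadzone edge to ±1 at full travel.
        sign = 1.0 if value > 0 else -1.0
        return sign * (abs(value) - self.deadzone) / (1.0 - self.deadzone)
```

Recording real end stops (for example, something like `AxisCalibration(raw_min=150, raw_center=1880, raw_max=3960)`) works better than assuming the nominal 0 and 4095, because the ESP32 ADC is less linear near the ends of its range.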

Expanding functionality with additional hardware

Adding additional hardware to a drone simulator can significantly expand its capabilities. Developers can integrate components like cameras, GPS modules, and environmental sensors to create a more immersive and functional simulation. These enhancements allow users to explore advanced features such as aerial photography, navigation, and environmental monitoring.

Examples of Additional Hardware

- Cameras: Cameras enable real-time video streaming and image capture. They simulate aerial photography and provide a first-person view (FPV) experience.
- GPS Modules: GPS modules add navigation capabilities. They allow users to simulate waypoint-based flight paths and test autonomous navigation systems.
- Environmental Sensors: Sensors like temperature, humidity, and air pressure modules simulate environmental conditions. These components are essential for testing drones in diverse scenarios.
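GPS modules usually stream NMEA 0183 sentences over UART. The following sketch parses the GGA (fix data) sentence into decimal degrees and altitude, checking the XOR checksum and the fix-quality field. It covers only this one sentence type and is meant to illustrate the format, not to replace a tested GPS library.

```python
from functools import reduce


def nmea_checksum(body: str) -> int:
    """XOR of every character between '$' and '*'."""
    return reduce(lambda acc, ch: acc ^ ord(ch), body, 0)


def _to_degrees(value: str, hemisphere: str) -> float:
    """Convert NMEA (d)ddmm.mmmm plus a hemisphere letter to signed decimal degrees."""
    dot = value.index(".")
    degrees = int(value[: dot - 2])
    minutes = float(value[dot - 2:])
    decimal = degrees + minutes / 60.0
    return -decimal if hemisphere in ("S", "W") else decimal


def parse_gga(sentence: str):
    """Return (latitude, longitude, altitude_m), or None if invalid or without a fix."""
    sentence = sentence.strip()
    if not sentence.startswith("$") or "*" not in sentence:
        return None
    body, _, checksum = sentence[1:].partition("*")
    fields = body.split(",")
    try:
        if int(checksum, 16) != nmea_checksum(body):
            return None
        if len(fields) < 10 or not fields[0].endswith("GGA"):
            return None
        if fields[6] in ("", "0"):  # fix quality 0 means no fix
            return None
        lat = _to_degrees(fields[2], fields[3])
        lon = _to_degrees(fields[4], fields[5])
        return lat, lon, float(fields[9])
    except ValueError:  # malformed numbers or missing fields
        return None
```

Decimal degrees are the form a Unity script would most naturally convert into local scene coordinates for waypoint visualization.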

Role of ESPHome in Hardware Integration

ESPHome simplifies the process of integrating additional hardware into the simulator. Its YAML-based configuration system allows developers to define hardware settings without complex coding. For instance, an ESPHome configuration can have the ESP32 read location data from a GPS module and publish it (for example over MQTT, which ESPHome supports) so that Unity can visualize it. Similarly, ESPHome can manage environmental sensors, providing a consistent path for data between the hardware and the simulation environment.

Tip: Use ESPHome’s automation features to trigger specific actions based on sensor data, such as simulating weather changes in Unity.

By leveraging ESPHome and additional hardware, developers can create a versatile and realistic drone simulator. These enhancements not only improve the user experience but also broaden the simulator’s applications in education, research, and prototyping.

Applications and Use Cases

Educational tools for drone training

Drone simulators serve as valuable educational tools for training purposes. They provide learners with a safe and controlled environment to practice flight operations without risking physical equipment. By simulating real-world conditions, these tools enhance the learning experience and improve performance. For example, simulators can replicate challenging scenarios like strong winds or obstacle-filled terrains, allowing users to develop critical problem-solving skills.

The historical use of flight simulators for pilot training demonstrates their effectiveness. Over the past 80 years, simulators have proven to be reliable platforms for skill development. Modern drone simulators build on this legacy and also support reinforcement learning, in which autonomous agents learn flight strategies through trial and error in the virtual environment. Bridging the reality gap through enhanced realism further improves training outcomes, making simulators valuable for educational purposes.

| Evidence Description | Key Insights |
|---|---|
| Access to simulation technology can increase real-world application performance, efficiency, and learning experience. | Highlights the practical benefits of using drone simulators in training. |
| The goal of reinforcement learning is to learn an optimum policy to achieve the maximum reward. | Emphasizes the importance of establishing standards for evaluation metrics in training. |
| Bridging the reality gap requires enhancing the realism of simulators. | Suggests that improving simulator realism can lead to better training outcomes. |
| A flight simulator has been widely used for 80 years to train a pilot. | Provides historical context for the effectiveness of simulators in pilot training. |

Prototyping and testing drone designs

Drone simulators offer a practical solution for prototyping and testing new designs. Developers can use simulators to evaluate the performance of drones under various conditions before physical assembly. This approach reduces costs and accelerates the design process. Simulators also allow for iterative testing, enabling developers to refine their designs based on observed behavior.

Advanced simulation interfaces, such as adaptive augmented reality (AR), enhance the testing experience. These interfaces provide detailed insights into drone performance metrics, including flight path deviations and defect percentages. For example, adaptive-AR interfaces can simulate real-world conditions, helping developers identify design flaws and optimize drone stability.

| Interface Condition | Percentage of Marked Defects | Deviation from Flight Path |
|---|---|---|
| 2D-only | Data not specified | Data not specified |
| Full-AR | Data not specified | Data not specified |
| Adaptive-AR | Data not specified | Data not specified |

Simulators equipped with the ESP32 microcontroller further enhance prototyping capabilities. The ESP32 processes real-time data from sensors, providing developers with accurate feedback on drone performance. This integration helps confirm that prototypes meet design specifications and perform reliably in diverse environments.

Research in robotics and IoT

Drone simulators play a crucial role in advancing research in robotics and IoT. Researchers use simulators to study complex flight dynamics, test control algorithms, and explore new applications for drones. For instance, simulators can model interactions between drones and IoT devices, enabling researchers to develop innovative solutions for smart cities and industrial automation.

The ESP32 microcontroller supports these research efforts by facilitating real-time data exchange between drones and IoT systems. Its versatility allows researchers to integrate sensors, cameras, and other peripherals into simulation setups. This capability enables detailed analysis of drone behavior and interaction with IoT networks.

Simulators also contribute to the development of autonomous drones. By testing algorithms in virtual environments, researchers can refine navigation systems and improve decision-making processes. These advancements have significant implications for industries like agriculture, logistics, and disaster management, where drones play an increasingly important role.

Entertainment and gaming scenarios

Drone simulators have found a unique place in the world of entertainment and gaming. These simulators provide users with an immersive experience, allowing them to explore the thrill of flying drones in virtual environments. Developers can design engaging scenarios, such as drone racing, obstacle courses, or aerial combat missions, to captivate players and challenge their skills.

One popular application involves creating multiplayer drone racing games. Players can compete in real time, navigating intricate tracks filled with sharp turns, tunnels, and dynamic obstacles. Unity’s graphics engine enhances these experiences by rendering realistic environments, such as urban landscapes or dense forests. The ESP32 microcontroller plays a vital role in these setups by processing input from joysticks or controllers and keeping gameplay smooth.

Another exciting use case is first-person view (FPV) drone simulation. These games replicate the perspective of flying a drone through its camera feed, offering players a highly immersive experience. The ESP32 enables real-time data exchange between peripherals and the simulation, ensuring responsive controls and accurate feedback. This setup allows players to practice complex maneuvers, such as flips and rolls, in a safe virtual space.

Developers can also integrate augmented reality (AR) elements into drone simulators. For example, AR overlays can add virtual targets or collectibles to real-world environments, blending physical and digital gameplay. This feature expands the possibilities for interactive gaming, making drone simulators a versatile tool for entertainment.

By combining Unity’s capabilities with the ESP32’s hardware integration, developers can create engaging and realistic gaming experiences. These simulators not only entertain but also help players develop valuable skills, such as hand-eye coordination and spatial awareness.

Building a drone simulator with Unity and the ESP32 microcontroller involves several essential steps. Developers can start by downloading tools like Blender and Unity 2017, followed by cloning repositories that support features such as velocity control and PID-based custom physics. Opening the Unity project, running the simulation, and configuring build settings complete the software setup. On the hardware side, learning ESP32 basics, leveraging its wireless capabilities, and integrating sensors like gyroscopes and accelerometers form the foundation for a functional simulator.

Peripherals and ESPHome significantly enhance the simulation experience. ESPHome simplifies the integration of hardware components, enabling seamless communication between the ESP32 and devices like joysticks or environmental sensors. This framework reduces complexity, allowing developers to focus on refining their project. By combining ESPHome with Unity’s simulation tools, users can create realistic and interactive environments for training, prototyping, and entertainment.

Experimentation remains key to innovation. Developers are encouraged to explore new ideas, expand functionality with additional hardware, and push the boundaries of what their project can achieve. Whether for education, research, or gaming, combining Unity, the ESP32, and ESPHome leaves plenty of room to explore.

What is the role of the ESP32 microcontroller in a drone simulator?

The ESP32 microcontroller processes real-time data from sensors and peripherals. It enables communication between hardware components and Unity, ensuring accurate simulation of drone behavior. Its wireless capabilities also support remote control and data exchange.

Can Unity simulate real-world drone flight conditions?

Yes, Unity can simulate real-world conditions like wind, terrain, and obstacles. Its rigid-body physics engine, combined with scripts that model aerodynamic forces and environmental effects, supports realistic scenarios for testing and training purposes.

How does ESPHome simplify hardware integration?

ESPHome uses YAML-based configurations to connect peripherals like sensors and joysticks to the ESP32. This approach largely removes the need for custom firmware code, making it easier to integrate hardware into the simulator.

What types of sensors are essential for a drone simulator?

Gyroscopes and accelerometers are essential. They provide data on motion, orientation, and acceleration, enabling accurate modeling of drone dynamics. Additional sensors, like GPS modules, can enhance navigation features.

Why are communication protocols important in a drone simulator?

Communication protocols ensure seamless data exchange between Unity and the ESP32. Protocols like Wi-Fi, Bluetooth, and ESP-NOW enable real-time control, sensor data processing, and peripheral interaction, enhancing the simulator’s responsiveness.
